AutoManner

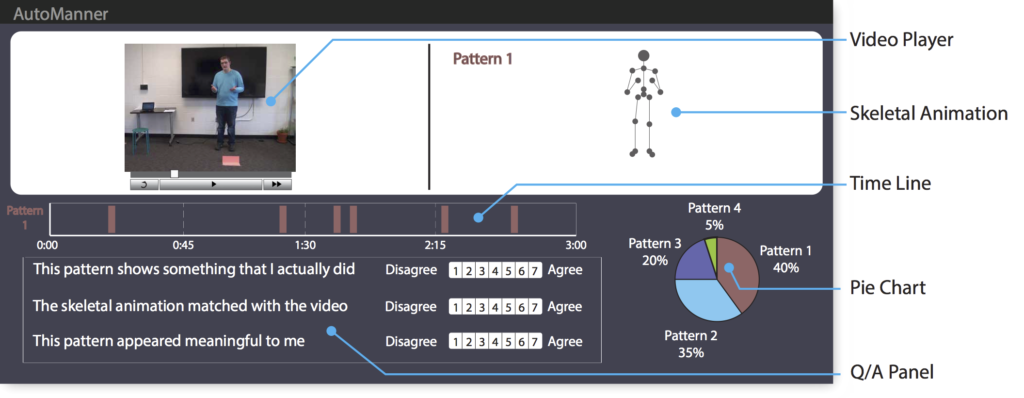

Many individuals exhibit unconscious body movements, called mannerisms, while speaking. When these repeated movements are not relevant to the verbal content, they often distract the audience. We present an intelligent interface that automatically extracts human gestures captured with Microsoft Kinect to make speakers aware of their mannerisms. We use a sparsity-based algorithm to automatically extract the patterns of body movement. These patterns are displayed in an interface with a subtle, question-and-answer-based feedback scheme that draws attention to the speaker’s body language. Our formal evaluation with 27 participants shows that users became aware of their body language after using the system. In addition, when independent observers annotated the accuracy of the algorithm for every extracted pattern, the patterns extracted by our algorithm were significantly more accurate than random selection. This is strong evidence that the algorithm can extract human-interpretable body movement patterns.
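The abstract describes the extraction step only as sparsity-based. One common way to find repeated movement patterns in a time series is shift-invariant (convolutional) sparse coding: the skeleton signal X is approximated as a sum of short patterns ψ_d, each convolved with a sparse activation signal α_d that marks where the pattern occurs, by minimizing ½‖X − Σ_d α_d ∗ ψ_d‖² + λ Σ_d ‖α_d‖₁. The following is a minimal NumPy sketch of that formulation, not the AutoManner implementation (which is in the repository listed under Code). It assumes X is a T × J array of joint coordinates, one row per Kinect frame; the function names, the unit-norm constraint on patterns, and the alternating ISTA / projected-gradient solver are illustrative choices.

```python
import numpy as np


def reconstruct(alpha, psi):
    """Sum of each activation signal convolved with its pattern.

    alpha: (D, K) sparse activations; psi: (D, M, J) patterns.
    Returns X_hat with shape (K + M - 1, J).
    """
    D, K = alpha.shape
    _, M, J = psi.shape
    X_hat = np.zeros((K + M - 1, J))
    for d in range(D):
        for j in range(J):
            X_hat[:, j] += np.convolve(alpha[d], psi[d, :, j], mode="full")
    return X_hat


def objective(X, alpha, psi, lam):
    """0.5 * squared reconstruction error + lam * L1 norm of the activations."""
    R = reconstruct(alpha, psi) - X
    return 0.5 * np.sum(R ** 2) + lam * np.sum(np.abs(alpha))


def soft_threshold(v, t):
    """Proximal operator of t * ||v||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def sisc(X, n_patterns, pattern_len, lam=0.1, n_iter=50, seed=0):
    """Hypothetical shift-invariant sparse coding via alternating gradient steps.

    X: (T, J) skeleton time series (T frames, J joint coordinates).
    Returns (alpha, psi, history); history holds the objective per iteration.
    """
    T, J = X.shape
    M = pattern_len
    K = T - M + 1  # number of start positions where a whole pattern fits
    rng = np.random.default_rng(seed)
    psi = rng.standard_normal((n_patterns, M, J))
    psi /= np.linalg.norm(psi, axis=(1, 2), keepdims=True)  # unit-norm patterns
    alpha = np.zeros((n_patterns, K))
    history = [objective(X, alpha, psi, lam)]

    for _ in range(n_iter):
        # Activation step: one ISTA update (gradient step + soft threshold).
        R = reconstruct(alpha, psi) - X
        grad_a = np.zeros_like(alpha)
        for d in range(n_patterns):
            for j in range(J):
                grad_a[d] += np.correlate(R[:, j], psi[d, :, j], mode="valid")
        # Upper bound on the Lipschitz constant of the gradient w.r.t. alpha.
        L_a = max(np.sum(np.abs(psi).sum(axis=1) ** 2), 1e-12)
        alpha = soft_threshold(alpha - grad_a / L_a, lam / L_a)

        # Pattern step: gradient step, then project each pattern onto the unit ball.
        R = reconstruct(alpha, psi) - X
        L_p = np.sum(np.abs(alpha).sum(axis=1) ** 2)  # bound for the psi gradient
        if L_p > 0:
            grad_p = np.zeros_like(psi)
            for d in range(n_patterns):
                for j in range(J):
                    grad_p[d, :, j] = np.correlate(R[:, j], alpha[d], mode="valid")
            psi = psi - grad_p / L_p
            norms = np.linalg.norm(psi, axis=(1, 2), keepdims=True)
            psi /= np.maximum(norms, 1.0)  # project onto ||psi_d||_F <= 1
        history.append(objective(X, alpha, psi, lam))
    return alpha, psi, history
```

Both steps use a step size of 1/L with a conservative bound on L, so the objective recorded in `history` should not increase; λ trades reconstruction accuracy against how sparsely each pattern is activated.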

More information is available in the following paper:

Tanveer, M. Iftekhar; Zhao, R.; Chen, K.; Tiet, Zoe; Hoque, M. Ehsan, AutoManner: An Automated Interface for Making Public Speakers Aware of Their Mannerisms, ACM Intelligent User Interfaces (IUI), March 2016.

Demo of AutoManner

An interactive demo is available here.

Unsupervised Extraction of Behavioral Cues

AutoManner is built on top of an unsupervised algorithm for extracting behavioral cues. See the following paper for more information on the algorithm:

Tanveer, M. Iftekhar; Liu, J.; Hoque, M. Ehsan, Unsupervised Extraction of Human-Interpretable Nonverbal Behavioral Cues in a Public Speaking Scenario, Proceedings of the 23rd Annual ACM Conference on Multimedia, ACM, 2015.

Demo of the algorithm

Here are a few demos showing representative results. On the demo page, you’ll see an interface for analyzing a public speaking video. Click the pie chart to view the extracted patterns. Click the time instances of the same color on the timeline to view the parts of the video where that pattern appeared.
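The page does not say how the pie chart and timeline are computed. Assuming each extracted pattern comes with a sparse activation signal whose nonzero entries mark the frames where an occurrence of that pattern starts, both views can be derived with a few lines of NumPy. The sketch below treats a pattern's pie-chart share as its fraction of all detected occurrences and converts frame indices to seconds at an assumed 30 frames per second; `pattern_summary`, `threshold`, and the 30 fps default are hypothetical and not part of the AutoManner code.

```python
import numpy as np


def pattern_summary(alpha, fps=30.0, threshold=1e-3):
    """Hypothetical summary of sparse activations for a pie chart and a timeline.

    alpha: (D, K) activation matrix, one row per extracted pattern.
    fps: assumed capture frame rate, used to convert frame indices to seconds.
    threshold: activations with magnitude at or below this count as absent.

    Returns (shares, timeline): shares[d] is pattern d's fraction of all
    detected occurrences (its pie-chart slice), and timeline[d] lists the
    times in seconds where pattern d starts (its colored timeline marks).
    """
    active = np.abs(alpha) > threshold
    counts = active.sum(axis=1)
    total = counts.sum()
    shares = counts / total if total > 0 else np.zeros(len(counts))
    timeline = [np.flatnonzero(row) / fps for row in active]
    return shares, timeline
```

Weighting slices by total activation magnitude instead of occurrence count would be an equally plausible design; counting keeps a single large activation from dominating the chart.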

Code

The AutoManner code is now released:

https://github.com/ROC-HCI/AutoManner

The AutoManner GUI code is now released:

https://github.com/ROC-HCI/AutoManner_GUI

Contributions are welcome.

AutoManner Dataset

The AutoManner dataset is now ready for release. To obtain a copy, please email Dr. M. Ehsan Hoque (mehoque at cs dot rochester dot edu).