Personalizing LLMs to combat misinformation

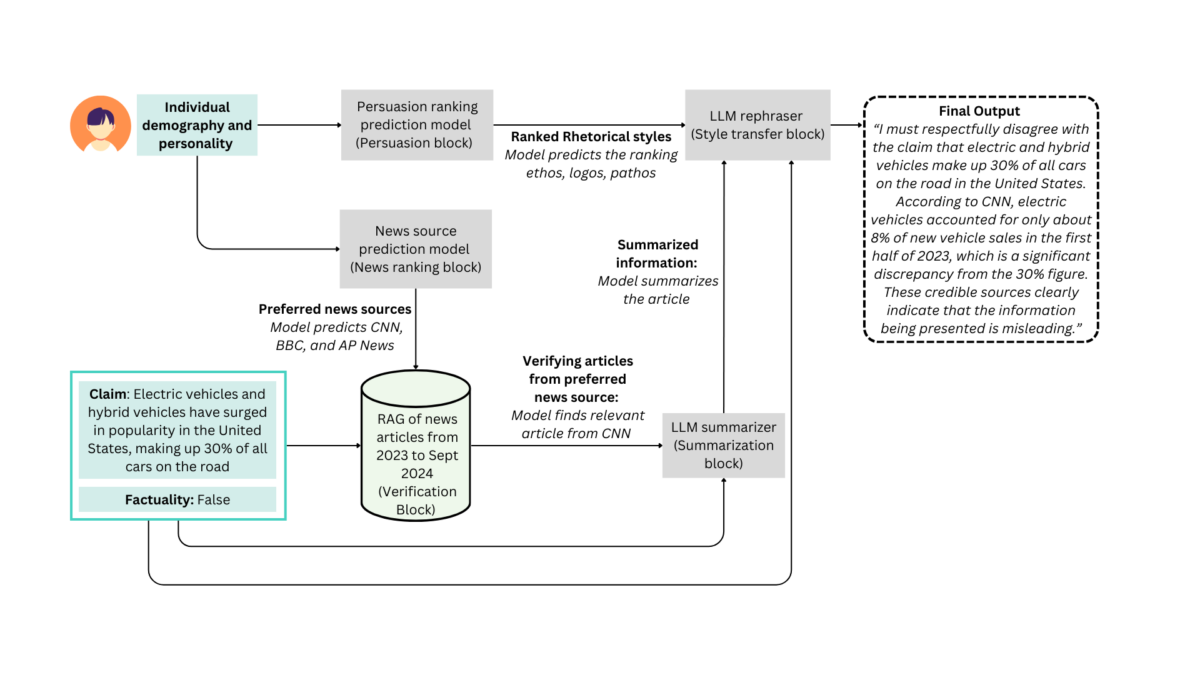

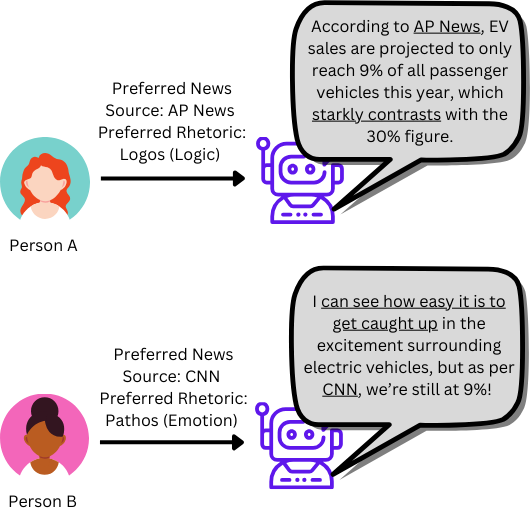

We design an LLM pipeline that tailors information to a user’s demographics and personality to combat misinformation.

Despite various efforts to tackle online misinformation, people inevitably encounter and engage with it, especially on social media platforms. Recent advances in LLMs present an opportunity to develop personalized interventions to address misinformed beliefs, and potentially offer more effective approaches than existing non-tailored methods.

To tackle this problem, we design and evaluate a personalized LLM agent that considers users’ demographics and personalities when tailoring responses to mitigate misinformed beliefs. The pipeline is grounded in facts through an external retrieval-augmented generation (RAG) knowledge base, and personalization leads it to generate diverse output, with an average cosine similarity of 0.538. When a GPT-4o-mini LLM judge rates response persuasiveness, the pipeline scores an average of 3.99 out of 5.
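As a rough illustration of how a diversity figure like the cosine similarity above could be computed, here is a minimal sketch. It assumes the score is the mean pairwise cosine similarity between embedding vectors of responses generated for different users; the summary does not state the exact procedure, so that choice, the function names, and the toy vectors are all illustrative assumptions.

```python
import math
from itertools import combinations


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length numeric vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


def average_pairwise_cosine(vectors):
    """Mean cosine similarity over all unordered pairs of vectors."""
    pairs = list(combinations(vectors, 2))
    if not pairs:
        raise ValueError("need at least two vectors")
    return sum(cosine_similarity(u, v) for u, v in pairs) / len(pairs)


# Hypothetical toy embeddings standing in for response embeddings;
# real embeddings would come from a text-embedding model (not shown here).
toy_embeddings = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [1.0, 1.0, 0.0],
]

score = average_pairwise_cosine(toy_embeddings)
print(f"average pairwise cosine similarity: {score:.3f}")
```

Under this reading, a lower average means the responses differ more from one another, so the value is a proxy for how much personalization changes the output.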

Our methods can be adapted to design similar personalized agents in other domains.

Publications

A. Proma, N. Pate, J. Druckman, G. Ghoshal, and E. Hoque, “Personalizing LLM Responses to Combat Political Misinformation”, to appear in ACM UMAP 2025.